Multivariate analysis of variance

Multivariate analysis of variance (MANOVA) is a statistical test procedure for comparing multivariate (population) means of several groups. Unlike ANOVA, it uses the variance-covariance between variables in testing the statistical significance of the mean differences.

It is a generalized form of univariate analysis of variance (ANOVA) and is used when there are two or more dependent variables. It helps to answer three questions: (1) whether changes in the independent variable(s) have significant effects on the dependent variables; (2) what the interactions among the dependent variables are; and (3) what the interactions among the independent variables are.[1]

Where sums of squares appear in univariate analysis of variance, in multivariate analysis of variance certain positive-definite matrices appear. The diagonal entries are the same kinds of sums of squares that appear in univariate ANOVA. The off-diagonal entries are corresponding sums of products. Under normality assumptions about error distributions, the counterpart of the sum of squares due to error has a Wishart distribution.
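As an illustration of these matrices, the following minimal sketch computes the between-group and within-group sums-of-squares-and-cross-products (SSCP) matrices for a one-way layout. The names `sscp_matrices`, `H` and `E`, and the toy data, are assumptions introduced here for illustration and do not come from the cited sources.

```python
import numpy as np

def sscp_matrices(X, groups):
    """Return (H, E): between-group and within-group SSCP matrices.

    X      : (n, p) array, one row per observation, one column per
             dependent variable.
    groups : length-n array of group labels.
    """
    X = np.asarray(X, dtype=float)
    groups = np.asarray(groups)
    grand_mean = X.mean(axis=0)
    p = X.shape[1]
    H = np.zeros((p, p))  # hypothesis (between-group) SSCP
    E = np.zeros((p, p))  # error (within-group) SSCP
    for g in np.unique(groups):
        Xg = X[groups == g]
        mean_g = Xg.mean(axis=0)
        d = (mean_g - grand_mean)[:, None]
        H += Xg.shape[0] * (d @ d.T)   # n_g * outer product of mean shift
        R = Xg - mean_g                # residuals within the group
        E += R.T @ R
    return H, E

# Toy data invented for illustration: 3 groups, 2 dependent variables.
X = np.array([[2.0, 3.0], [3.0, 5.0], [4.0, 4.0],
              [6.0, 7.0], [7.0, 9.0], [8.0, 8.0],
              [5.0, 2.0], [6.0, 1.0], [7.0, 3.0]])
groups = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2])

H, E = sscp_matrices(X, groups)

# Total SSCP about the grand mean; it decomposes as H + E.
centered = X - X.mean(axis=0)
T = centered.T @ centered
```

The diagonal of `E` holds the univariate within-group sums of squares for each variable, and the off-diagonal entries hold the corresponding sums of products.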

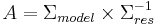

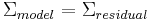

Analogous to ANOVA, MANOVA is based on the product of the model variance matrix, $\Sigma_{\text{model}}$, and the inverse of the error variance matrix, $\Sigma_{\text{res}}^{-1}$, or $A = \Sigma_{\text{model}} \times \Sigma_{\text{res}}^{-1}$. The hypothesis that $\Sigma_{\text{model}} = \Sigma_{\text{res}}$ implies that the product $A \sim I$ [2]. Invariance considerations imply the MANOVA statistic should be a measure of magnitude of the singular value decomposition of this matrix product, but there is no unique choice owing to the multi-dimensional nature of the alternative hypothesis.

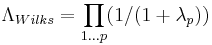

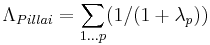

The most common[3][4] statistics are summaries based on the roots (or eigenvalues)  of the

of the  matrix:

matrix:

- Samuel Stanley Wilks'

distributed as lambda (Λ)

distributed as lambda (Λ) - the Pillai-M. S. Bartlett trace,

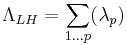

- the Lawley-Hotelling trace,

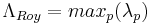

- Roy's greatest root (also called Roy's largest root),
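The four statistics listed above can be sketched in a few lines. The example assumes a hypothesis SSCP matrix `H` and a positive-definite error SSCP matrix `E` are already available, and takes the roots to be the eigenvalues of `H E^{-1}`; the function name `manova_statistics` and the numeric matrices are hypothetical, chosen only for illustration.

```python
import numpy as np

def manova_statistics(H, E):
    """Compute the four classical MANOVA statistics from SSCP matrices.

    Uses the eigenvalues of E^{-1} H, which are the same as those of
    H E^{-1} because the two matrices are similar.
    """
    eig = np.linalg.eigvals(np.linalg.solve(E, H))
    # The roots are real and non-negative in exact arithmetic;
    # drop round-off in the imaginary part and clip tiny negatives.
    lam = np.clip(eig.real, 0.0, None)
    return {
        "wilks": float(np.prod(1.0 / (1.0 + lam))),
        "pillai": float(np.sum(lam / (1.0 + lam))),
        "lawley_hotelling": float(np.sum(lam)),
        "roy": float(np.max(lam)),
    }

# Hypothetical SSCP matrices for two dependent variables.
H = np.array([[8.0, 2.0], [2.0, 4.0]])
E = np.array([[6.0, 3.0], [3.0, 6.0]])

stats = manova_statistics(H, E)
for name, value in stats.items():
    print(f"{name}: {value:.4f}")
```

Some software reports Roy's statistic as the largest root divided by one plus the largest root rather than the root itself, so values from different packages may not agree for that entry.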

Discussion continues over the merits of each, though the greatest root leads only to a bound on significance which is not generally of practical interest. A further complication is that the distribution of these statistics under the null hypothesis is not straightforward and can only be approximated except in a few low-dimensional cases. The best-known approximation for Wilks' lambda was derived by C. R. Rao.
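For concreteness, here is a minimal sketch of Rao's F approximation in the form commonly given in multivariate textbooks. The formula is supplied here as an assumption rather than taken from the cited sources: `p` is the number of dependent variables, `q` the hypothesis degrees of freedom, and `error_df` the error degrees of freedom. The function name is hypothetical.

```python
import math

def rao_f_approximation(wilks, p, q, error_df):
    """Approximate F statistic and degrees of freedom for Wilks' lambda.

    Returns (f_stat, df1, df2). The result is an approximation in
    general; it is exact when min(p, q) is 1 or 2.
    """
    pq = p * q
    denom = p**2 + q**2 - 5
    # By convention t = 1 when the denominator is not positive.
    t = math.sqrt((pq**2 - 4) / denom) if denom > 0 else 1.0
    w = error_df + q - (p + q + 1) / 2
    df1 = pq
    df2 = w * t - (pq - 2) / 2
    root = wilks ** (1.0 / t)
    f_stat = (1.0 - root) / root * df2 / df1
    return f_stat, df1, df2

# Univariate check (p = 1): the formula reduces to the ordinary ANOVA F,
# (SSH / q) / (SSE / error_df), because Wilks' lambda = SSE / (SSE + SSH).
f_stat, df1, df2 = rao_f_approximation(wilks=0.5, p=1, q=2, error_df=6)
print(f_stat, df1, df2)
```

A p-value would then come from the upper tail of an F distribution with `df1` and `df2` degrees of freedom; `df2` need not be an integer.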

In the case of two groups, all the statistics are equivalent and the test reduces to Hotelling's T-square.
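The two-group equivalence can be checked numerically. With two groups the hypothesis SSCP matrix has rank one, so there is a single non-zero root, and it equals $T^2 / (n_1 + n_2 - 2)$; every statistic is then a monotone function of $T^2$. The sketch below uses invented toy data and hypothetical variable names.

```python
import numpy as np

# Toy data invented for illustration: two groups, two dependent variables.
X1 = np.array([[2.0, 3.0], [3.0, 5.0], [4.0, 4.0]])
X2 = np.array([[6.0, 7.0], [7.0, 9.0], [8.0, 8.0]])
n1, n2 = len(X1), len(X2)

# Difference of group mean vectors.
d = X1.mean(axis=0) - X2.mean(axis=0)

# Within-group (error) SSCP matrix and pooled covariance matrix.
R1 = X1 - X1.mean(axis=0)
R2 = X2 - X2.mean(axis=0)
E = R1.T @ R1 + R2.T @ R2
S_pooled = E / (n1 + n2 - 2)

# Hotelling's two-sample T-squared statistic.
t2 = n1 * n2 / (n1 + n2) * d @ np.linalg.solve(S_pooled, d)

# Between-group SSCP for two groups is a rank-one matrix.
H = n1 * n2 / (n1 + n2) * np.outer(d, d)

# Its only non-zero root equals the trace of E^{-1} H.
lam = np.trace(np.linalg.solve(E, H))

wilks = 1.0 / (1.0 + lam)
print(t2, lam, wilks)
```

Here `lam` matches `t2 / (n1 + n2 - 2)`, and Wilks' lambda, the Pillai-Bartlett trace, the Lawley-Hotelling trace and Roy's root are respectively `1 / (1 + lam)`, `lam / (1 + lam)`, `lam` and `lam`.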

References

1. Stevens, J. P. (2002). Applied multivariate statistics for the social sciences. Mahwah, NJ: Lawrence Erlbaum.

2. Carey, Gregory. "Multivariate Analysis of Variance (MANOVA): I. Theory". http://ibgwww.colorado.edu/~carey/p7291dir/handouts/manova1.pdf. Retrieved 2011-03-22.

3. Garson, G. David. "Multivariate GLM, MANOVA, and MANCOVA". http://faculty.chass.ncsu.edu/garson/PA765/manova.htm. Retrieved 2011-03-22.

4. UCLA: Academic Technology Services, Statistical Consulting Group. "Stata Annotated Output -- MANOVA". http://www.ats.ucla.edu/stat/stata/output/Stata_MANOVA.htm. Retrieved 2011-03-22.
